Docker - Generative AI

Docker is a popular platform for containerizing generative AI models because it is lightweight and efficient, handles resources well, and has strong community support. Containerizing a generative AI model means packaging the model, its dependencies, and the runtime environment it needs into a self-contained Docker image.

The resulting image can then be deployed and run consistently across environments, from development to production. Deploying a generative AI model in a container gives you reproducibility, portability, and efficient use of resources.

Benefits of Using Docker for Generative AI Models

The following table highlights the major benefits of using Docker for Generative AI models −

| Benefit | Description |
|---|---|
| Isolation | Encapsulate models and their dependencies, preventing conflicts. |
| Portability | Run models consistently across different environments. |
| Reproducibility | Ensure consistent model behavior in different setups. |
| Efficiency | Optimize resource utilization by sharing the host OS kernel instead of running a full OS for each model. |
| Scalability | Easily scale model deployment to meet demand. |
| Version Control | Manage different model versions effectively, for example with image tags. |
| Collaboration | Share models and environments with team members. |
| Deployment Flexibility | Deploy models to various platforms (cloud, on-premises). |
| Security | Isolate models and protect sensitive data. |
| Cost-Efficiency | Optimize hardware resources and reduce costs. |

Building a Docker Image for Generative AI

For better efficiency and compatibility, select a suitable base image. In this example, we'll use Ollama, a tool for running large language models (LLMs) locally. Ollama can also be installed directly from its official website, but here we'll run it through its Docker image.

Choosing a Base Image

Ollama provides a pre-built Docker image that simplifies the setup process. We'll use the official Ollama Docker image as the base −

```dockerfile
FROM ollama/ollama:latest
```

Installing Dependencies

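The Ollama image already contains everything Ollama itself needs. If your setup requires additional libraries, you can install them with the RUN instruction. Note that this is not a Python image, so pip is not available by default; on the Ubuntu-based Ollama image, Python libraries can be installed through apt −

```dockerfile
# Example: install additional Python libraries with apt
RUN apt-get update \
    && apt-get install -y --no-install-recommends python3-numpy python3-pandas \
    && rm -rf /var/lib/apt/lists/*
```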

Incorporating Model Weights

Ollama supports a variety of pre-trained models. You specify the model you want at runtime with the ollama run command, and Ollama downloads the model's weights if they aren't present yet. Therefore, there's no need to copy model weights into the image manually.

Example

```shell
ollama run llama3
```

Here's the final Dockerfile −

```dockerfile
# Use the official Ollama Docker image as the base
FROM ollama/ollama:latest

# Set the working directory (optional)
WORKDIR /app

# Install additional dependencies if required (optional)
# RUN apt-get update && apt-get install -y --no-install-recommends python3-numpy python3-pandas

# Expose the Ollama port (optional)
EXPOSE 11434
```

Build the Model Image

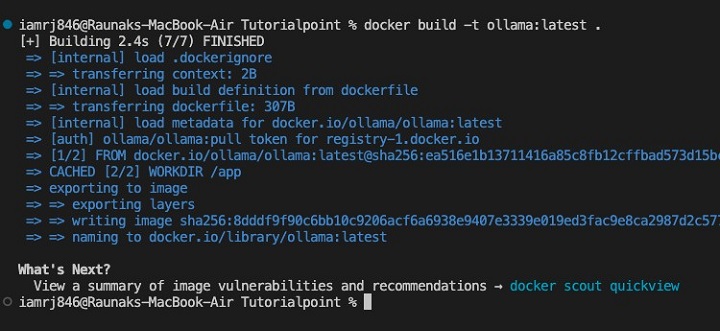

Next, let's use the docker build command to build the image −

```shell
$ docker build -t ollama:latest .
```

Optimizing Image Size

Because we build on top of the official Ollama image, our options for reducing the image size are limited. However, you can consider −

- Specific Ollama image tag − If available, use a tag that only includes needed components.

- Base image minimization − Create a base image with fewer packages if needed.

- Multi-stage builds − Use multi-stage builds when you have complex build processes, so that build-time tools don't end up in the final image.

Note − Ollama handles much of the model optimization internally, so there is usually less need for manual optimization than with other frameworks.

Using the Ollama Docker image makes it easy to package your generative AI model into a container.

Deploying the Generative AI Model with Docker

Containerizing the Model

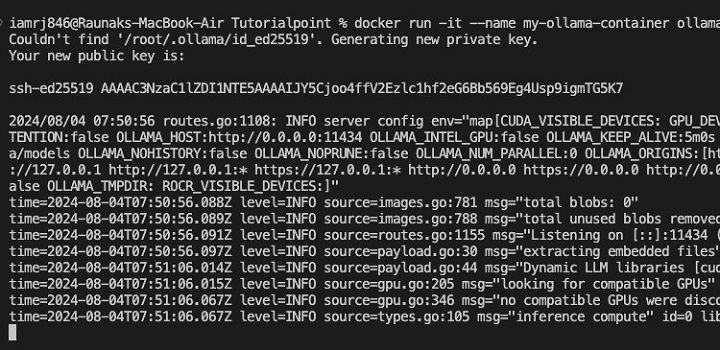

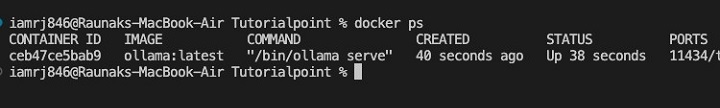

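We've already built the Docker image in the previous section. Now, let's run the image as a container.

```shell
$ docker run -d -p 11434:11434 --name my-ollama-container ollama:latest
```

The above command starts the Ollama container in the background (the -d flag), publishes Ollama's API port 11434 to the host (the -p flag), and names it my-ollama-container. Because the image's default command is ollama serve, the Ollama server starts together with the container.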

Running the Container Locally

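Because the Ollama image's default command is already ollama serve, you don't need to start the server yourself; running ollama serve again with docker exec would fail because port 11434 is already in use. To confirm that the server is running, check the container's logs −

```shell
$ docker logs my-ollama-container
```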

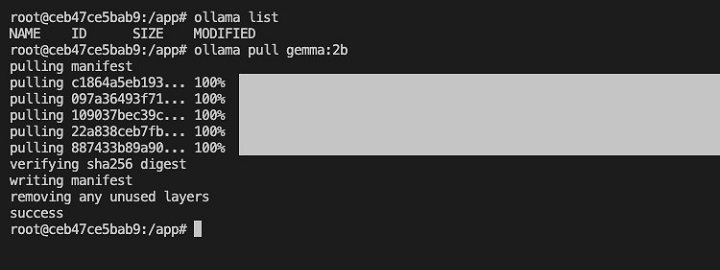

Accessing the Model and Displaying Outputs

Ollama provides a CLI to interact with the model. You can use the ollama command to generate text.

```shell
$ docker exec -it my-ollama-container /bin/bash
```

Run the above command to open a shell inside my-ollama-container.

Next, run the following commands to list the models available locally and download the gemma:2b model.

```shell
$ ollama list
$ ollama pull gemma:2b
```
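The ollama list command also has an HTTP counterpart: a GET request to /api/tags on the Ollama server returns the locally available models as JSON. The sketch below reads that list from Python using only the standard library. It assumes the container publishes port 11434 to the host (for example, it was started with -p 11434:11434); the helper names are illustrative, not part of Ollama.

```python
import json
import urllib.request

# Assumption: the Ollama API is reachable from the host at this address,
# i.e. the container was started with port 11434 published.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_response):
    """Extract model names from the JSON returned by GET /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_local_models(base_url=OLLAMA_URL):
    """Rough equivalent of `ollama list`: ask the server which models it has."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return parse_model_names(json.load(resp))


if __name__ == "__main__":
    print(list_local_models())
```

After ollama pull gemma:2b, the printed list should include gemma:2b.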

Next, run the model using the ollama run command as shown −

```shell
$ ollama list
$ ollama run gemma:2b
```

You can now send prompts like "Tell me a joke" to the Ollama model and it will display the generated response.
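Besides the interactive CLI, Ollama serves a REST API on port 11434, and its /api/generate endpoint accepts a model name and a prompt. The sketch below sends one prompt from the host with the Python standard library. It assumes port 11434 is published to the host and that gemma:2b has already been pulled; build_generate_request and generate are illustrative names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumes port 11434 is published to the host


def build_generate_request(prompt, model="gemma:2b", base_url=OLLAMA_URL):
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for a single JSON object instead of streamed chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="gemma:2b", base_url=OLLAMA_URL):
    """Send a prompt to the Ollama server and return the generated text."""
    req = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(req, timeout=120) as resp:
        # With streaming disabled, the generated text is in the "response" field
        return json.load(resp)["response"]


if __name__ == "__main__":
    print(generate("Tell me a joke"))
```

Setting "stream" to False keeps the example short; with streaming enabled, the server returns the answer as a sequence of JSON objects that the client must read one by one.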

Integrating Generative AI with Applications

With Docker Compose, you can define and run multi-container Docker applications. It is helpful when running complex applications involving multiple services.

Using Docker Compose for Multiple Containers

Create a docker-compose.yml file −

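```yaml
version: '3.7'

services:
  ollama:
    image: ollama/ollama:latest
    ports:
      - "11434:11434"

  app:
    build: .
    ports:
      - "5000:5000"
    environment:
      # Lets the ollama Python client inside the app reach the ollama service
      - OLLAMA_HOST=http://ollama:11434
    depends_on:
      - ollama
```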

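In this docker-compose.yml file, we have defined two services: ollama and app. The app service is built from a Dockerfile in the current directory that packages your application. Because app depends on ollama, Docker Compose starts the Ollama container before the app container. Note that depends_on controls only the start order; it does not wait until the Ollama server is ready to accept requests.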
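Because depends_on only orders container startup, the app may start before the Ollama server accepts connections. One common workaround is to poll the server before serving traffic. The following is a minimal sketch, assuming the server is reachable as http://ollama:11434 (the Compose service name) and answers its root URL once it is up; wait_for_ollama is a hypothetical helper, not part of Ollama.

```python
import time
import urllib.request


def wait_for_ollama(base_url="http://ollama:11434", timeout=60.0, interval=1.0):
    """Poll the Ollama server until it answers, or give up after `timeout` seconds.

    Assumption: the server replies 200 on its root URL once it is running.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(base_url + "/", timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError and connection errors are OSError subclasses:
            # the server is not up yet, so try again
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

The app could call wait_for_ollama() once before app.run() and exit with an error if it returns False.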

Exposing APIs for Model Access

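You can use a web framework like Flask or FastAPI to expose the generative AI model as an API. Let's create an endpoint that passes a prompt to the Ollama model and returns the generated text. This example uses the ollama Python client library, which must be installed in the app image along with Flask (pip install flask ollama) −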

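```python
from flask import Flask, jsonify, request
import ollama

app = Flask(__name__)

# The model must already be pulled into the Ollama server, for example:
#   docker compose exec ollama ollama pull gemma:2b
MODEL_NAME = 'gemma:2b'


def generate_text(prompt):
    # The ollama client reads the server address from the OLLAMA_HOST
    # environment variable (by default it uses port 11434 on localhost)
    response = ollama.generate(model=MODEL_NAME, prompt=prompt)
    return response['response']


@app.route('/generate', methods=['POST'])
def generate():
    prompt = request.get_json()['prompt']
    return jsonify({'response': generate_text(prompt)})


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=5000)
```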

You can integrate the API into your web application using your preferred framework. You can make HTTP requests to the API endpoint to generate text and display it to the user.
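For example, any HTTP client can call the /generate endpoint. This standard-library sketch assumes the Flask app is published on localhost:5000, as in the Compose file; ask is an illustrative name, not part of Flask or Ollama.

```python
import json
import urllib.request

# Assumption: the Flask app from this section is published on localhost:5000
API_URL = "http://localhost:5000/generate"


def ask(prompt, url=API_URL):
    """POST a prompt to the /generate endpoint and return the generated text."""
    req = urllib.request.Request(
        url,
        data=json.dumps({"prompt": prompt}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        # The endpoint replies with {"response": "<generated text>"}
        return json.load(resp)["response"]


if __name__ == "__main__":
    print(ask("Tell me a joke"))
```

A web front end would do the same thing with its own HTTP client, then render the returned text for the user.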

Conclusion

Docker provides an excellent infrastructure for deploying and managing generative AI models. By containerizing models and their dependencies, developers can reproduce the environment used during development, and then deploy, scale, and integrate generative AI models into applications using Docker Compose and exposed APIs.

This approach lets teams run models consistently while using resources efficiently. As generative AI becomes more widely adopted, Docker's role in deploying and managing models is likely to grow.

FAQs on Docker for Generative AI Models

1. Can I use Docker to deploy a generative AI model to production?

Yes, Docker is well suited for deploying generative AI models to production. You can pack the model, its dependencies, and its serving infrastructure into containers, which helps you build flexible and robust applications.

Docker containers are also easy to deploy on hosting platforms, including cloud environments.

2. How can Docker improve the performance of generative AI models?

Docker doesn't make a model faster on its own, but it lets you control the resources each container receives. You can limit CPU and memory with the --cpus and --memory options of docker run, and give a container access to GPUs with --gpus (this requires GPU support, such as the NVIDIA Container Toolkit, on the host), so the model gets the resources it needs.

Combined with lightweight containers and an optimized base image, Docker also helps you serve models efficiently.

3. Can I use Docker to train generative AI models?

Docker is most often used to deploy trained models, but it can be used for training as well. For industrial-scale training, however, you will usually need dedicated platforms with specialized hardware and software.

Docker can be useful in creating reproducible training environments and managing dependencies related to training.