- Kubernetes Tutorial

- Kubernetes - Home

- Kubernetes - Overview

- Kubernetes - Architecture

- Kubernetes - Setup

- Kubernetes - Setup on Ubuntu

- Kubernetes - Images

- Kubernetes - Jobs

- Kubernetes - Labels & Selectors

- Kubernetes - Namespace

- Kubernetes - Node

- Kubernetes - Service

- Kubernetes - POD

- Kubernetes - Replication Controller

- Kubernetes - Replica Sets

- Kubernetes - Deployments

- Kubernetes - Volumes

- Kubernetes - Secrets

- Kubernetes - Network Policy

- Advanced Kubernetes

- Kubernetes - API

- Kubernetes - Kubectl

- Kubernetes - Kubectl Commands

- Kubernetes - Creating an App

- Kubernetes - App Deployment

- Kubernetes - Autoscaling

- Kubernetes - Dashboard Setup

- Kubernetes - Helm Package Management

- Kubernetes - CI/CD Integration

- Kubernetes - Persistent Storage and PVCs

- Kubernetes - RBAC

- Kubernetes - Logging & Monitoring

- Kubernetes - Service Mesh with Istio

- Kubernetes - Backup and Disaster Recovery

- Managing ConfigMaps and Secrets

- Running Stateful Applications

- Kubernetes Useful Resources

- Kubernetes - Quick Guide

- Kubernetes - Useful Resources

- Kubernetes - Discussion

Logging and Monitoring with Prometheus and Grafana

Monitoring and logging are essential for managing applications in Kubernetes. Without proper visibility into our cluster's health and performance, troubleshooting issues becomes challenging. This is where Prometheus and Grafana come into play. Prometheus is a powerful monitoring system designed for Kubernetes, while Grafana provides rich visualization capabilities.

In this chapter, we'll explore how to set up Prometheus and Grafana in Kubernetes, collect metrics, visualize data, and configure alerts to ensure proactive monitoring. By the end, we'll have a fully functional monitoring system integrated into our Kubernetes environment.

Why Monitoring Matters

As applications grow in complexity, monitoring helps us −

- Detect Performance Issues − Spot slow responses, memory leaks, or high CPU usage before users experience problems.

- Ensure System Reliability − Maintain uptime by detecting failures early.

- Analyze Trends − Understand how our application behaves over time.

- Alert on Issues − Receive notifications when critical metrics exceed thresholds.

Understanding Prometheus

Prometheus is an open-source monitoring system specifically designed for dynamic cloud environments like Kubernetes. It works by scraping metrics from instrumented applications and storing them in a time-series database. Prometheus provides −

- Multi-dimensional data model with key-value labels

- Powerful query language (PromQL) for data analysis

- Pull-based model where Prometheus scrapes metrics from targets

- Built-in alerting via Alertmanager

- Integration with Kubernetes Service Discovery

Prometheus Key Concepts

- Targets: The services Prometheus scrapes metrics from (e.g., Kubernetes nodes, pods, applications).

- Jobs: Groups of targets defined in the configuration.

- Metrics: Time-series data collected from targets.

- PromQL (Prometheus Query Language): Used to query and analyze stored metrics.

- Alerting rules: Conditions defined to trigger alerts.

Understanding Grafana

Grafana is a visualization tool that transforms raw metrics into rich dashboards. It connects to Prometheus and provides −

- Interactive charts, graphs, and tables

- Custom dashboards for real-time monitoring

- Alerting and notifications via email, Slack, or other integrations

- Support for multiple data sources (Prometheus, Loki, Elasticsearch, etc.)

Setting Up Prometheus and Grafana in Kubernetes

To get started, we will deploy Prometheus and Grafana in our Kubernetes cluster. The easiest way to install them is by using Helm, a package manager for Kubernetes.

Prerequisites

Before we begin, we'll ensure that:

- A Kubernetes cluster is up and running.

- kubectl is configured to communicate with the cluster.

- Helm is installed on our system.

Install Prometheus and Grafana

We'll start by adding the Prometheus Helm Repository −

$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

Output

"prometheus-community" has been added to your repositories

Update the Helm Repository −

$ helm repo update

Output

Hang tight while we grab the latest from your chart repositories... ...Successfully got an update from the "prometheus-community" chart repository ...Successfully got an update from the "bitnami" chart repository Update Complete. Happy Helming!

Install Prometheus Stack using Helm −

$ helm install prometheus prometheus-community/kube-prometheus-stack --namespace monitoring --create-namespace

Output

NAME: prometheus

LAST DEPLOYED: Mon Mar 10 11:14:18 2025

NAMESPACE: monitoring

STATUS: deployed

REVISION: 1

NOTES:

kube-prometheus-stack has been installed. Check its status by running:

kubectl --namespace monitoring get pods -l "release=prometheus"

Get Grafana 'admin' user password by running:

kubectl --namespace monitoring get secrets prometheus-grafana

-o jsonpath="{.data.admin-password}" | base64 -d ; echo

Access Grafana local instance:

export POD_NAME=$(kubectl --namespace monitoring get pod -l

"app.kubernetes.io/name=grafana,app.kubernetes.io/instance=prometheus" -oname)

kubectl --namespace monitoring port-forward $POD_NAME 3000

This installs Prometheus, Grafana, and Alertmanager under the monitoring namespace.

Verify that Prometheus Pods are Running −

$ kubectl get pods -n monitoring

NAME READY STATUS RESTARTS AGE alertmanager-prometheus-kube-prometheus-alertmanager-0 0/2 Init:0/1 0 76s prometheus-grafana-54d864bf96-t2qjr 0/3 ContainerCreating 0 3m30s prometheus-kube-prometheus-operator-dd4b85cc8-qjjmq 1/1 Running 0 3m30s prometheus-kube-state-metrics-888b7fd55-lv5mq 1/1 Running 0 3m30s prometheus-prometheus-kube-prometheus-prometheus-0 0/2 Init:0/1 0 76s prometheus-prometheus-node-exporter-wvgxw 1/1 Running 0 3m30s

This confirms that some of the required services are running, while others are still initializing.

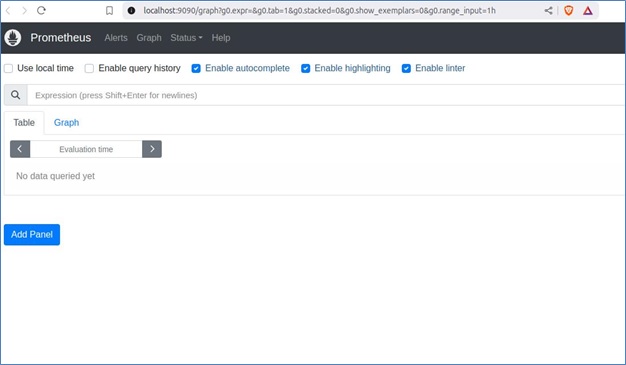

Access the Prometheus UI

To access this UI, we'll first port-forward the Prometheus pod −

$ kubectl port-forward prometheus-prometheus-kube-prometheus-prometheus-0 9090 -n monitoring

Output

Forwarding from 127.0.0.1:9090 -> 9090 Forwarding from [::1]:9090 -> 9090

Now, we can visit http://localhost:9090 in our browser to access the Prometheus UI.

Access the Grafana UI

Well first port-forward the Grafana pod with the following command −

$ kubectl port-forward prometheus-grafana-54d864bf96-t2qjr 3000 -n monitoring

Output

Forwarding from 127.0.0.1:3000 -> 3000 Forwarding from [::1]:3000 -> 3000

Now, we can open http://localhost:3000 in a web browser and log in using −

- Username: admin

- Password: prom-operator (kubectl --namespace monitoring get secrets prometheus-grafana -o jsonpath="{.data.admin-password}" | base64 -d ; echo)

Collecting Metrics in Kubernetes

Prometheus automatically discovers and scrapes Kubernetes components, including:

- Node metrics (CPU, Memory, Disk Usage)

- Pod and container metrics (Resource consumption, restarts, errors)

- Kubernetes API metrics (Event logs, scheduler stats)

To manually expose metrics from an application, we can use an Exporter.

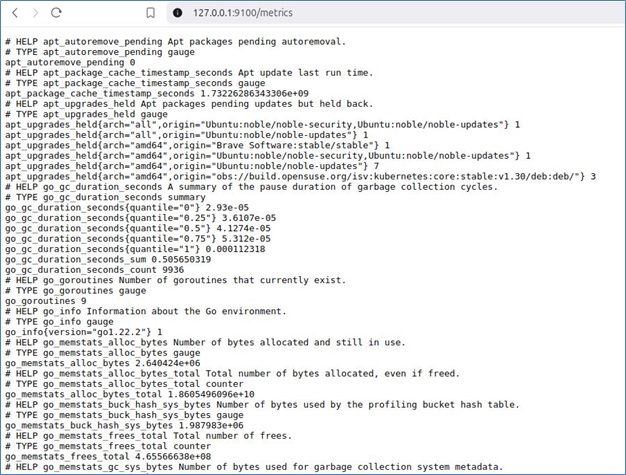

Example: Node Exporter (Collecting System Metrics)

$ helm install node-exporter prometheus-community/prometheus-node-exporter --namespace monitoring

Output

NAME: node-exporter

LAST DEPLOYED: Mon Mar 10 13:42:25 2025

NAMESPACE: monitoring

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace monitoring -l

"app.kubernetes.io/name=prometheus-node-exporter,app.kubernetes.io/instance=node-exporter" -o

jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:9100 to use your application"

kubectl port-forward --namespace monitoring $POD_NAME 9100

Expose Node Exporter on Port 9100:

$ export POD_NAME=$(kubectl get pods --namespace monitoring -l

"app.kubernetes.io/name=prometheus-node-exporter,app.kubernetes.io/instance=node-exporter" -o

jsonpath="{.items[0].metadata.name}")

$ kubectl port-forward --namespace monitoring $POD_NAME 9100

Visit http://127.0.0.1:9100 to see system metrics.

This collects host-level system metrics, including CPU, memory, disk usage, filesystem statistics, and network activity.

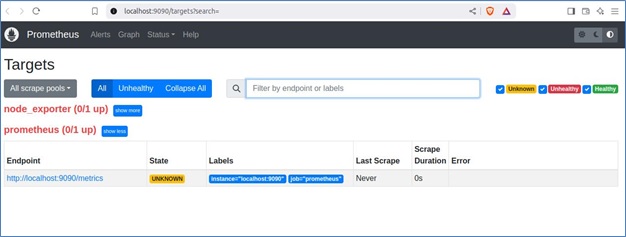

Monitoring Targets in Prometheus

Prometheus collects metrics from various sources, known as targets. These targets can include the Prometheus server itself, Node Exporter, application instances, and other services exposing metrics in a Prometheus-compatible format.

When Prometheus scrapes a target, it assigns one of the following states:

- Up(Healthy): The target is active and responding to Prometheus scrapes.

- Down (Unhealthy): The target is unreachable, or Prometheus cannot retrieve metrics.

- Unknown: The target has not been scraped yet or is misconfigured.

Key Details Displayed on the Targets Page

- Endpoint − The URL where Prometheus scrapes metrics.

- State − Whether the target is up, down, or unknown.

- Labels − Identifiers used for querying and grouping in Prometheus.

- Last Scrape − The last time Prometheus successfully scraped the target.

- Scrape Duration − The time taken to collect the metrics.

- Error − If Prometheus fails to scrape a target, an error message is displayed.

Configuring Targets in Prometheus

Targets are defined in the Prometheus configuration file (prometheus.yml) under the scrape_configs section.

Here is an example configuration for monitoring both Prometheus itself and Node Exporter:

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets: ['localhost:9090']

- job_name: 'node_exporter'

static_configs:

- targets: ['localhost:9100']

Each job_name represents a monitored service, and targets specify the endpoints Prometheus scrapes.

Viewing Target Metrics

Once configured, visit http://localhost:9090/targets to check the status of each target. Clicking on an endpoint will display the raw metrics exposed by the service. These metrics can then be queried in Prometheus and visualized in tools like Grafana for better insights.

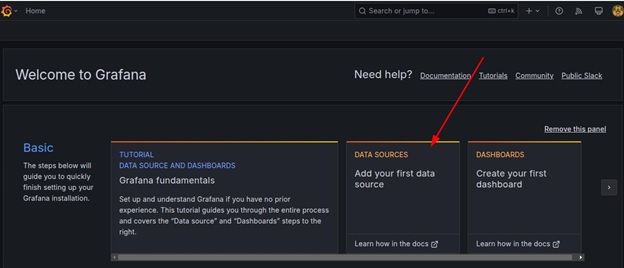

Visualizing Metrics with Grafana

Let's now understand how to visualize metrics with Grafana:

Connecting Prometheus to Grafana

To get started, log in to Grafana by accessing http://localhost:3000 and using the admin credentials.

Next, navigate to the welcome page and go to Data Sources.

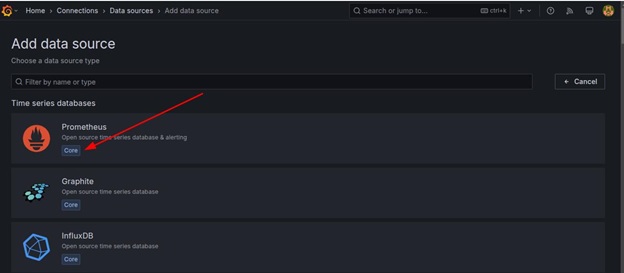

Click Add Data Source and select Prometheus as the data source type.

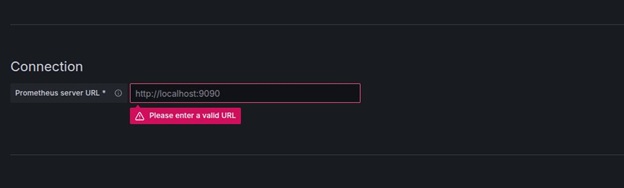

In the URL field, enter http://localhost:9090 as the Prometheus server URL.

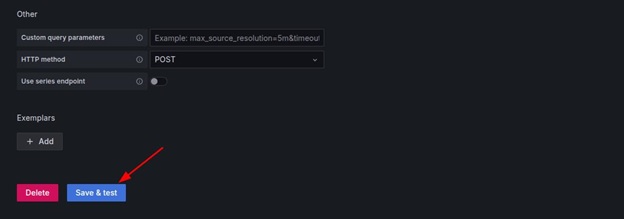

Click Save & Test.

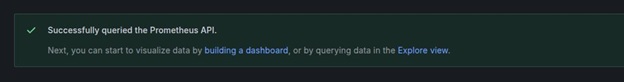

The following output confirms a successful connection:

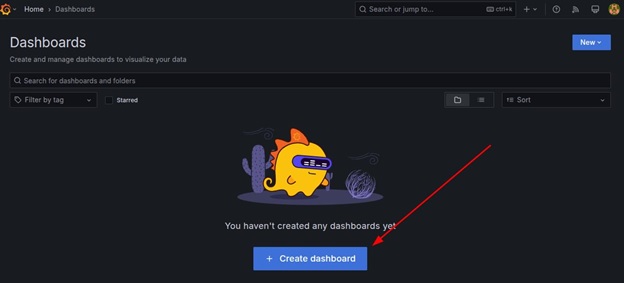

Creating a Grafana Dashboard with Custom Visualizations

To achieve this, start by clicking Create → Dashboard.

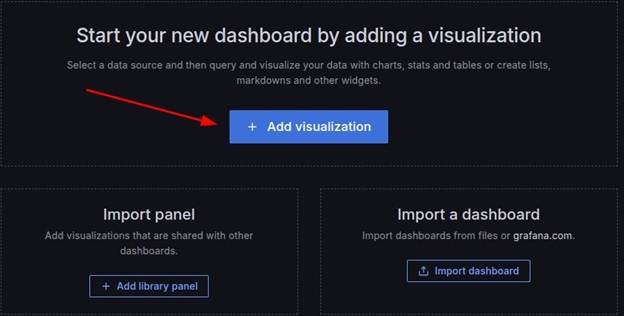

Next, click the Add visualization button.

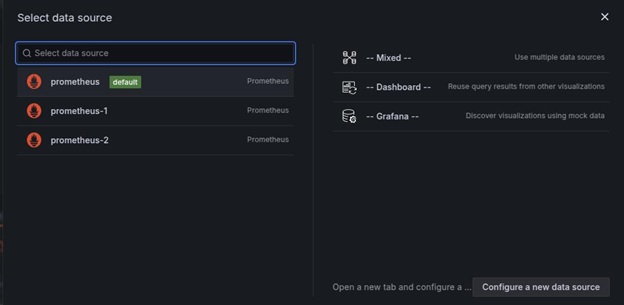

Select prometheus (default) as the data source.

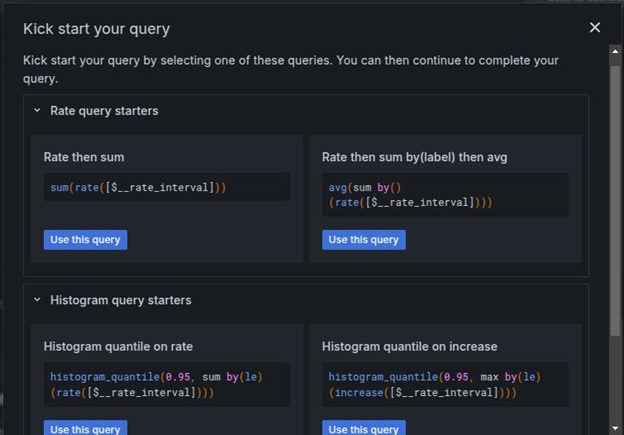

In the Query section, click kick start your query button to choose the query type. Use the Rate then sum as the query.

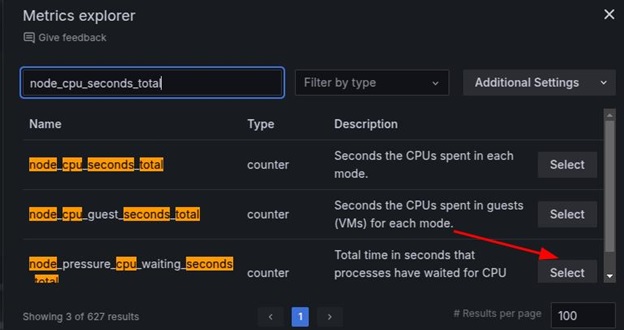

Then, input the query, for instance, node_cpu_seconds_total, and click the relevant option.

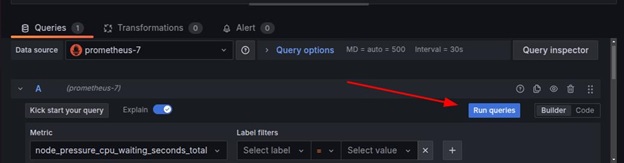

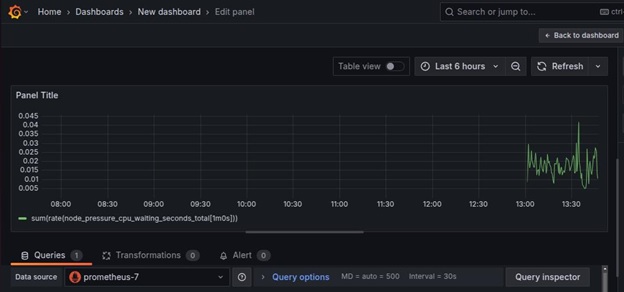

Choose time series as the visualization type (e.g., Graph, Gauge, Table, or Heatmap), and complete the query by clicking run queries.

Now, the raw data can be visualized.

Additional Example Queries for Visualization

- CPU Usage per Pod: sum(rate(container_cpu_usage_seconds_total{namespace="monitoring"}[5m])) by (pod)

- Memory Usage per Node: sum(container_memory_usage_bytes) by (node)

- Disk I/O Reads per Container: rate(container_fs_reads_bytes_total[5m])

- Network Traffic per Pod: sum(rate(container_network_receive_bytes_total[5m])) by (pod)

Importing a Pre-built Kubernetes Dashboard in Grafana

Grafana allows importing dashboards using JSON files, which makes it easy to reuse pre-configured visualizations. To import a dashboard, follow these steps:

Obtain a JSON Dashboard File

Download pre-built Kubernetes monitoring dashboards from Grafana's official website or export an existing dashboard as a JSON file.

Import the JSON File in Grafana

- Go to Dashboards → Import.

- Click Upload JSON file and select your pre-configured dashboard file.

- Alternatively, paste the JSON content into the Import via JSON

Select Data Source

Choose the correct Prometheus data source to ensure the imported dashboard retrieves the expected metrics.

Import the Dashboard

Click Import to load a detailed Kubernetes monitoring dashboard.

Once imported, customize the dashboard by adding additional panels or adjusting alert thresholds.

Configuring Alerts in Prometheus

To receive alerts when issues occur, we can configure Alertmanager.

Create an Alerting Rule

Save the following as alerts.yaml.

apiVersion: monitoring.coreos.com/v1

kind: PrometheusRule

metadata:

name: high-cpu-alert

namespace: monitoring

spec:

groups:

- name: example.rules

rules:

- alert: HighCPUUsage

expr: (100 - (avg by(instance) (rate(node_cpu_seconds_total{mode="idle"}[5m])) * 100)) > 80

for: 2m

labels:

severity: warning

annotations:

summary: "High CPU usage detected"

description: "CPU usage has exceeded 80% for more than 2 minutes"

Apply the rule:

$ kubectl apply -f alerts.yaml

Output

prometheusrule.monitoring.coreos.com/high-cpu-alert created

This confirms that Prometheus has successfully added the alert rule.

Managing Alerts in Grafana

Let's now understand how to manage alerts in Grafana:

Edit the Prometheus Alert Rules:

groups:

- name: instance-down

rules:

- alert: InstanceDown

expr: up == 0

for: 5m

labels:

severity: critical

annotations:

summary: "Instance {{ $labels.instance }} is down"

Apply the alert configuration:

$ kubectl apply -f prometheus-rules.yaml

Output

prometheusrule.monitoring.coreos.com/instance-down created

Setting Up Alerts in Grafana

Open Grafana:

- Go to Alerting → Contact Points.

- Create a new contact point (e.g., Email, Slack, PagerDuty).

- Configure the recipient details.

Define a New Alert Rule:

- Go to Alerting → New Alert Rule.

- Choose a metric query (e.g., node_cpu_seconds_total).

- Set conditions (e.g., CPU usage > 80%).

- Define notification policies to link alerts with contact points.

Test and Activate the Alert:

- Click Test Rule to validate.

- Save and enable the rule to start monitoring.

Alert status changes from Pending → Firing when conditions are met. In addition, notifications are sent to the configured contact points when an alert triggers. This ensures that both Prometheus and Grafana provide efficient alerting mechanisms for monitoring Kubernetes infrastructure.

Troubleshooting Common Issues

We have highlighted here some of the common issues faced in monitoring and how to troubleshoot such issues.

Prometheus Not Scraping Metrics

- Check if the target is correctly discovered: kubectl get servicemonitors -n monitoring

- Inspect Prometheus logs: kubectl logs -l app=prometheus -n monitoring

Grafana Dashboards Not Displaying Data

- Verify the Prometheus data source connection.

- Check PromQL queries for correct syntax.

- Inspect Grafana logs: kubectl logs -l app=grafana -n monitoring

Alerts Not Firing

- Confirm alert rules are correctly loaded: kubectl get prometheusrules -n monitoring

- Test alerts manually: kubectl exec -it prometheus-0 -n monitoring -- promtool check rules /etc/prometheus/rules.yaml

Best Practices for Monitoring in Kubernetes

Listed below are some of the best practices for monitoring in Kubernetes:

- Use Dashboards − Regularly review Grafana dashboards to track key metrics.

- Set Alerts − Configure alerts to notify you of abnormal conditions.

- Optimize Storage − Ensure Prometheus retention settings are configured correctly to avoid excessive data usage.

- Secure Access − Restrict access to monitoring tools to avoid unauthorized access.

Conclusion

Monitoring and logging are crucial for maintaining a healthy Kubernetes environment. Prometheus and Grafana provide powerful capabilities for collecting, analyzing, and visualizing metrics. By setting up Prometheus for metric collection and Grafana for visualization, we gain deep insights into our cluster’s health, helping us detect and resolve issues efficiently.

By implementing these tools, we ensure that our Kubernetes workloads run smoothly and any potential issues are addressed before they impact users.