- OS - Home

- OS - Needs

- OS - Overview

- OS - History

- OS - Components

- OS - Structure

- OS - Architecture

- OS - Services

- OS - Properties

- OS - TAT & WAT

- OS Processes

- OS - Processes

- OS - Process Scheduling

- OS - Scheduling Algorithms

- FCFS Scheduling Algorithm

- SJF Scheduling Algorithm

- Round Robin Scheduling Algorithms

- HRRN Scheduling Algorithms

- Priority Scheduling Algorithms

- Multilevel Queue Scheduling

- Context Switching

- Operations on Processes

- Lottery Process Scheduling

- Predicting Burst Time SJF Scheduling

- Race Condition Vulnerability

- Critical Section Synchronization

- Mutual Exclusion Synchronization

- Process Control Block

- Inter Process Communication

- Preemptive and Non-Preemptive Scheduling

- Operating System - Deadlock

- Introduction to Deadlock in Operating System

- Conditions for Deadlock in Operating System

- OS Synchronization

- Operating System - Process Synchronization

- Operating System - Critical Section

- Operating System - Semaphores

- Operating System - Counting Semaphores

- Operating System - Mutex

- Operating System - Lock Variable in Process Synchronization

- Operating System - Turn Variable in Process Synchronization

- Operating System - Bounded Buffer Problem

- Operating System - Reader Writer Locks in Process Synchronization

- Operating System - Test Set Lock in Process Synchronization

- Operating System - Peterson Solution in Process Synchronization

- Operating System - Monitors in Process Synchronization

- Operating System - Sleep and Wake in Process Synchronization

- OS Memory Management

- OS - Memory Management

- OS - Virtual Memory

- OS Storage Management

- File Systems in Operating System

- Linked Index Allocation in Operating System

- Indexed Allocation in Operating System

- Structures of Directory in Operating System

- File Attributes in Operating System

- Operating System - Page Replacement

- Operating Systems - Thrashing

- Belady’s Anomaly in Page Replacement Algorithms

- Optimal Page Replacement Algorithm

- Operating System - Types

- Types of Operating System

- Batch Processing Operating System

- Multiprocessing Operating System

- Hybrid Operating System

- Monolithic Operating System

- Zephyr Operating System

- Nix Operating System

- Blackberry Operating System

- Garuda Operating System

- Tails Operating System

- Clustered Operating System

- Haiku Operating System

- AIX Operating System

- Solus Operating system

- Tizen Operating System

- Bharat Operating System

- Fire Operating System

- Bliss Operating System

- VxWorks Operating System

- Embedded Operating System

- Single User Operating System

- OS Miscellaneous

- OS - Multi-threading

- OS - I/O Hardware

- OS - I/O Software

- OS - Security

- OS - Linux

- OS Useful Resources

- OS - Quick Guide

- OS - Useful Resources

- OS - Discussion

Critical Section in Synchronization

What is Critical Section?

A critical section denotes a segment of code in a process where the process changes the value or state of any shared resource. The shared resources refer to shared memory variables, common tables, shared files, shared devices, or any other system resource.

The segment of code is called a "critical section" because if multiple processes attempt to manipulate a shared resource concurrently, the outcome becomes inconsistent due to a phenomenon called race condition.

The Critical Section Problem

The critical section problem is to design a protocol such that when one process is executing in its critical section, other processes are not allowed to execute in their critical sections simultaneously, thereby avoiding race conditions occurring.

To achieve this cooperation, each process must request permission to enter its critical section. The section of code where a process does so is called the entry section. If the process is granted permission, it enters the critical section, where it updates the values of the shared resource.

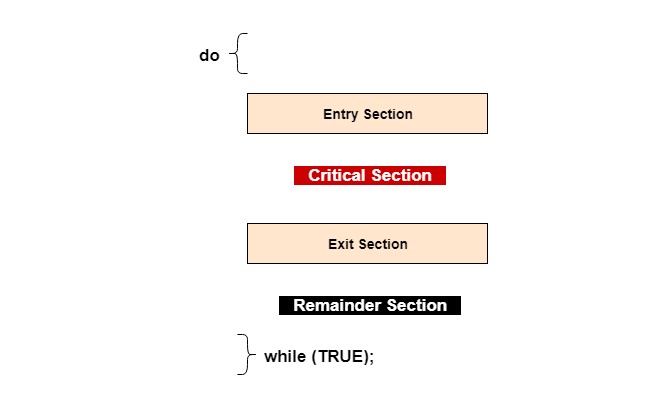

After the critical section, the process goes through the exit section, in which it relinquishes its control over the shared resource and announces this to the other processes in the system. The process then carries on executing its remaining statements. The following figure shows the general structure of a process having a critical section.

Requirements for Solution to Critical Section Problem

Any solution to the critical section problem needs to satisfy three requirements as follows −

1. Mutual Exclusion

Mutual exclusion implies that only one process can be inside the critical section at any time. If other processes need to execute in their critical sections, they must wait until it is free.

2. Progress

When no process is currently in its critical section and some processes want to enter their critical sections, only the processes not in their remainder sections can be involved in deciding which process can enter its critical section next, and this decision cannot be delayed indefinitely. Progress ensures that there is fair arbitration among the processes so that the processes continue with their execution.

3. Bounded Waiting

Bounded waiting means that each process must have a limited waiting time. It should not wait endlessly to access the critical section. It sets a bound to the number of times other processes can gain access to the critical section when a process requests permission for its critical section.

Algorithms and Methods to Solve Critical Section Problem

In order to tackle the critical section problem, a number of algorithms and methods have been developed. Some of the most common solutions are listed below.

1. Petersons Solution

The Peterson's solution is a classical software-based approach to ensure mutual exclusion among concurrent processes. It enables two processes to access a shared resource without conflict, relying on shared memory for communication. This algorithm uses two shared variables, namely flag to indicate interest of one process in entering the critical section and turn to determine priority if both processes are interested.

2. Test-and-Set

This approach uses a shared boolean variable known as "lock" such that lock = true indicates that a process is in its critical section. The entry section of each process has an atomic function "test-and-set", which checks the value of lock. If lock is true, it waits. Otherwise, it sets lock to true and enters the critical section. In the exit section, lock is reset to false.

3. Semaphores

Semaphore is an advanced synchronization tool. It uses two atomic operations, "wait()" and "signal()". The wait() instruction is used in the entry section to gain access to the critical section, while the signal() operation is used to release control of the shared resource. Semaphores can be of two types, binary semaphores and counting semaphores.

4. Compare-and-Swap

This approach is similar to the "test-and-set" method. It uses a shared boolean variable whose value is tested and set using the "compare_and_swap" instruction. The lock is set to true only if the value passed to it matches a certain value.

5. Mutex Locks

The term mutex is derived from mutual exclusion. It uses locks which are manipulated using the atomic functions "acquire()" and "release()" in the entry section and the exit section respectively. Only one process can acquire the lock at a time and so only one process can gain access to the critical section at a time.

Approaches to Critical Section Problem in OS Kernel

As in user processes, many kernel processes also execute concurrently that may attempt to update values of kernel data structures. Kernel data are shared data structures that include process lists, memory allocations, list of open files, interrupt handling and the like. The attempts to update these values cause a high probability of several race condition situations. OS race conditions are more crucial than those occurring in user processes and may result in system crash. As a consequence, kernel developers need meticulous design strategies to avoid kernel race conditions.

Kernels in operating systems are broadly categorized as preemptive and non-preemptive ones. Let us look into the general approaches to tackle race conditions in either of them.

Non-preemptive Kernels

Non-preemptive kernels are inherently free from race conditions. Here, a process running in kernel mode cannot be preempted by any other process. It will continue to run until it exits, blocks, or voluntarily gives up control of the CPU. Since only one process is active in the kernel at any given time, non-preemptive kernels are not prone to race conditions on kernel data structures.

Preemptive Kernels

In preemptive kernels, an executing kernel process may be stopped halfway and another process may start to run. This may corrupt the values of the kernel data structures. Preemptive kernels are essential for real time systems for their greater responsiveness and throughput, and consequently cant be replaced by the non-preemptive counterparts. So they require adequate measures to handle kernel critical sections.

A common solution is to use the spinlock code. When a kernel process holds the spinlock, preemption or interrupt is temporarily disabled on the corresponding processor. The process executes its critical section code and then releases the spinlock. Once the spinlock is released, interrupts are enabled again. Since disabling interrupts may have a number of adverse consequences, the goal is to minimize the time of holding a spinlock by any process.