Computer Graphics Tutorial

Computer graphics makes it possible to display pictures of any size on a computer screen. Various algorithms and techniques are used to generate graphics on computers. This tutorial will help you understand how these graphics are processed by the computer to give the user a rich visual experience.

What is Computer Graphics?

Computer graphics, a dynamic field within computing, involves the creation, manipulation, and rendering of visual content using computers. It includes digital images, animations, and interactive graphics used in various sectors like entertainment, education, scientific visualization, and virtual reality.

Computer graphics is used in UI design, rendering, geometric modeling, and animation. It refers to the representation and manipulation of images or data in graphical form, and it draws on a range of technologies for creating, synthesizing, and manipulating digital content.

Types of Computer Graphics

Computer graphics can be broadly categorized based on their applications, nature, or the way they are generated. Here are the main types of computer graphics −

- Raster Graphics (Bitmap Graphics) − Bitmap graphics are the images made up of tiny dots called pixels (picture elements).

- Vector Graphics − Images are created using mathematical equations to represent geometric shapes such as lines, circles, and polygons.

- 3D Graphics − Graphics that represent three-dimensional objects and scenes, often used for simulations, video games, and movies.

- Interactive Graphics − Graphics that allow users to interact with them, typically through a user interface (UI).

- Real-Time Graphics − Graphics that are rendered in real-time, meaning they are created and displayed instantly as the user interacts with them.

Basic Principles of Animation in Computer Graphics

Animation in computer graphics is a sequence of frames, where each frame produces one graphics output of the scene. Generally, not all frames are generated in the same way.

Interpolation is used to create animation: intermediate frames are computed between given frames using linear, quadratic, or other types of interpolation. There are several types of animation, including key-framing, morphing, and tween animation.
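As a minimal sketch of the interpolation idea, the following Python snippet generates in-between positions for an object moving between two keyframes. The function names `lerp` and `inbetween_frames` and the sample keyframe positions are illustrative assumptions, not part of any particular animation library.

```python
def lerp(a, b, t):
    """Linearly interpolate between a and b for t in [0, 1]."""
    return a + (b - a) * t


def inbetween_frames(start, end, count):
    """Return `count` evenly spaced 2D positions strictly between two keyframes."""
    frames = []
    for i in range(1, count + 1):
        t = i / (count + 1)
        frames.append((lerp(start[0], end[0], t), lerp(start[1], end[1], t)))
    return frames


# Hypothetical keyframes: the object moves from (0, 0) to (8, 4).
frames = inbetween_frames((0.0, 0.0), (8.0, 4.0), 3)
print(frames)  # [(2.0, 1.0), (4.0, 2.0), (6.0, 3.0)]
```

Quadratic or other interpolation types would replace `lerp` with a different blending function while keeping the same overall structure.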

Raster and Vector Graphics

Raster and vector graphics are two common types of digital images.

Raster graphics consist of tiny pixels; they are ideal for detailed and colourful images like photographs. Vector graphics, on the other hand, use mathematical paths and suit scalable designs like logos and illustrations.

Raster images use bitmaps to store information, so larger images require larger bitmaps. Vector graphics instead use sequential commands or mathematical statements to place lines or shapes in a 2-D or 3-D environment.

Pixel and Image Resolution

Pixels, a term derived from "picture element," are the fundamental units of digital images. On a color display, each pixel typically consists of red, green, and blue (RGB) subpixels, whose intensities mix to create diverse colors. Pixels form a raster image, with each pixel representing a specific position and color value.

The resolution of an image is the number of pixels in each row and each column. An image with a larger number of pixels can represent more detail than one with fewer pixels.

What is Rendering in Computer Graphics?

Rendering means visualizing data. In computer graphics, rendering is the process of displaying the generated graphics. However, the term "rendering" is most often used to mean 3D rendering.

3D rendering is a computer-based process that generates a 2D image from a digital 3D scene. It is essential in various industries like architecture, product design, advertising, video games, and visual effects.

Some of the rendering techniques are listed below −

- Scanline rendering is a traditional technique that determines visible surfaces one row of pixels (scan line) at a time.

- Z-Buffer is a two-dimensional array used to compute and store a depth value for each pixel.

- Shading and lighting control the light and dark levels of surfaces using software effects.

- Texture / Bump Mapping adds color, material, and surface detail to objects.

- Ray Tracing and Ray Casting are useful rendering techniques for creating natural lighting effects.

Who Should Learn Computer Graphics

This tutorial on Computer Graphics has been prepared for students who don't know how graphics are used in computers. It explains the basics of graphics and how they are implemented in computers to generate various visuals.

Prerequisites to Learn Computer Graphics

Before proceeding with this tutorial, you should be familiar with the basic concepts of the C programming language and basic mathematics.

FAQs on Computer Graphics

This section briefly answers some Frequently Asked Questions (FAQs) on Computer Graphics.

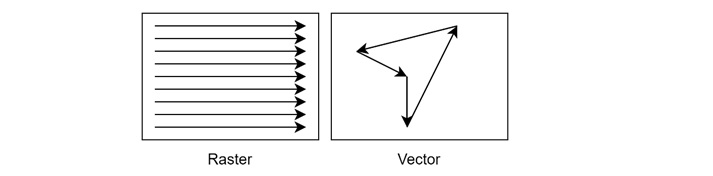

1. How do display devices like CRT and LCD work in the context of graphics?

CRT monitors use electron beams to make phosphor dots on the screen glow, and this is how images are formed. LCD monitors, on the other hand, use liquid crystals and a backlight to control light and form images.

Both types rely on the graphics card to render and send the image signal. CRTs are typically driven by an analog signal, while LCDs typically use a digital signal. The graphics card and monitor must support the same resolution and refresh rate for proper display.

2. What is the role of linear algebra in computer graphics?

Linear algebra is like the math toolkit for computer graphics. It helps in creating and moving objects in 2D or 3D on the screen. For example, it allows us to rotate, scale, and shift objects. Think of it as the math behind making video games and animations look real.

Without linear algebra, most of computer graphics would not be practical. Geometric operations are written as systems of linear equations, which are expressed as matrices and applied to the points of an object.

3. What is a Frame Buffer?

A frame buffer is a memory buffer in RAM that contains a bitmap that drives a video display. It represents all pixels in a complete video frame, and the display hardware converts this in-memory bitmap into a video signal for display on a computer monitor.

The buffer typically contains color values for every pixel, stored in 1-bit binary, 4-bit palettized, 8-bit palettized, 16-bit high color, and 24-bit true color formats. The total memory required depends on the output signal resolution and color depth.
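The dependence of memory on resolution and color depth can be worked through with a short calculation. This is a sketch that assumes a single, tightly packed buffer with no padding or alignment; the function name `framebuffer_bytes` is illustrative.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed for one tightly packed frame (no row padding assumed)."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8  # round up to whole bytes


print(framebuffer_bytes(640, 480, 1))     # 38400    (1-bit binary)
print(framebuffer_bytes(640, 480, 8))     # 307200   (8-bit palettized)
print(framebuffer_bytes(1920, 1080, 24))  # 6220800  (24-bit true color)
```

Real hardware often pads rows or uses 32 bits per pixel for alignment, so actual figures can be larger.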

4. How do Spatial Data Structures improve rendering efficiency?

Spatial data structures like grids, octrees, and BSP trees help organize 3D objects in a scene. They make rendering faster by reducing the number of calculations needed to determine what is visible.

For example, instead of checking every object, the system only checks those in relevant areas. This speeds up processes like ray tracing, making graphics smoother and more efficient. These data structures also help determine which objects lie in front of or behind others.
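The idea of checking only relevant areas can be illustrated with a uniform grid. The sketch below works in 2D for brevity and uses hypothetical helper names (`build_grid`, `query_neighbourhood`); a 3D grid or an octree follows the same principle with one more coordinate.

```python
from collections import defaultdict


def build_grid(points, cell_size):
    """Bucket 2D points into square cells keyed by integer cell coordinates."""
    grid = defaultdict(list)
    for p in points:
        key = (int(p[0] // cell_size), int(p[1] // cell_size))
        grid[key].append(p)
    return grid


def query_neighbourhood(grid, point, cell_size):
    """Return only the points stored in the 3x3 block of cells around `point`."""
    cx, cy = int(point[0] // cell_size), int(point[1] // cell_size)
    found = []
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            found.extend(grid.get((cx + dx, cy + dy), []))
    return found


# Hypothetical scene: four object positions, grid cells of size 10.
objects = [(1, 1), (2, 3), (50, 50), (95, 5)]
grid = build_grid(objects, 10)
nearby = query_neighbourhood(grid, (0, 0), 10)
print(nearby)  # [(1, 1), (2, 3)]
```

Only two of the four objects are examined for the query near the origin; the distant ones are never touched.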

5. What is the significance of Color Depth?

Color depth, also known as bit depth, is the number of bits used to indicate the color of a single pixel or each color component of a single pixel. It can be defined as bits per pixel (bpp), bits per component, bits per channel, bits per color (bpc), bits per pixel component, bits per color channel, or bits per sample (bps).

Modern standards typically use bits per component, while historical lower-depth systems used bits per pixel more often. Color precision and gamut are defined through a color encoding specification.

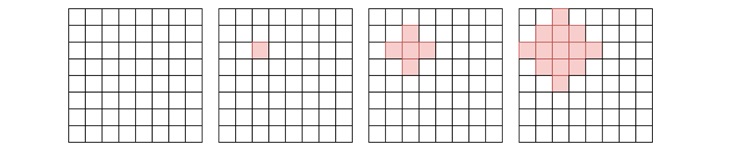

6. What is anti-aliasing, and why is it important?

Antialiasing is a technique in computer graphics that reduces the aliasing effect, which appears as jagged edges in rasterized images. Aliasing arises from undersampling, that is, sampling the scene at too low a frequency during scan conversion.

Aliasing occurs when smooth, continuous curves are rasterized using pixels; the cause of aliasing is undersampling.

After anti-aliasing is applied, edges appear smoother and lines look cleaner, which improves the overall quality of the picture.
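One common way to counter undersampling is supersampling: take several samples inside each pixel and use the covered fraction as the pixel's intensity. The following is a minimal sketch of that idea, not the only antialiasing method; the `coverage` function and the diagonal test shape are assumptions made for illustration.

```python
def coverage(px, py, inside, n=4):
    """Fraction of n*n sub-samples in pixel (px, py) for which inside(x, y) is true."""
    hits = 0
    for i in range(n):
        for j in range(n):
            x = px + (i + 0.5) / n
            y = py + (j + 0.5) / n
            if inside(x, y):
                hits += 1
    return hits / (n * n)


def below_diagonal(x, y):
    """Hypothetical shape: the half-plane below the line y = x."""
    return y < x


print(coverage(0, 0, below_diagonal))  # 0.375 -> edge pixel gets a partial shade
print(coverage(1, 0, below_diagonal))  # 1.0   -> fully covered
print(coverage(0, 1, below_diagonal))  # 0.0   -> not covered
```

With one sample per pixel the edge pixel would be either fully on or fully off, which is what produces the jagged staircase.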

7. What is the difference between 2D and 3D graphics?

2D and 3D graphics differ in their creation and outcome. 2D graphics consist of height and width, while 3D graphics add depth for realism.

2D graphics are commonly used in animation and video games, providing a flat view of movement on the screen. 3D graphics offer realistic depth, allowing viewers to see into spaces, observe light and shadow movement, and gain a fuller understanding of the content. They work with the brain's natural tendency to explore and enrich our understanding of the world.

8. What are Homogeneous Coordinates?

Homogeneous coordinates are widely used in computer graphics. They serve as the basis for the geometry used to display three-dimensional objects on two-dimensional image planes, and they provide a standard way to perform operations on points in Euclidean space through matrix multiplication.

Homogeneous coordinates add an extra value (usually written w) to each point, which makes it possible to represent and implement geometric transformations, including translation, uniformly as matrices. Most transformations in graphics are represented by matrices that are applied to vectors given in Cartesian form with this extra coordinate.
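To make the role of the extra value concrete, here is a small 2D sketch. It assumes the common convention that points carry an extra coordinate of 1 and directions carry 0; the helper names `mat_vec` and `to_cartesian` are illustrative.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-component homogeneous vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


def to_cartesian(h):
    """Convert homogeneous (x, y, w) back to Cartesian (x/w, y/w); w must be non-zero."""
    x, y, w = h
    return (x / w, y / w)


# Translation by (5, -1) written as a single matrix.
translate = [[1, 0, 5],
             [0, 1, -1],
             [0, 0, 1]]

point = (2, 3, 1)      # a position: extra coordinate 1
direction = (2, 3, 0)  # a direction: extra coordinate 0

moved_point = mat_vec(translate, point)
moved_direction = mat_vec(translate, direction)
print(moved_point)                # (7, 2, 1)
print(moved_direction)            # (2, 3, 0) -> directions are unaffected by translation
print(to_cartesian((4, 6, 2)))    # (2.0, 3.0)
```

Without the extra coordinate, translation cannot be expressed as a matrix multiplication at all, which is why the representation is so convenient.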

What is Graphics Pipeline?

Graphics Pipeline is a framework in computer graphics that outlines the procedures for converting a 3D scene into a 2D representation on a screen. It converts the model into a visually perceivable format on a computer display.

A universally applicable graphics pipeline is not available due to its dependence on specific software, hardware configurations, and display attributes. Instead, graphics application programming interfaces (APIs) like Direct3D, OpenGL, and Vulkan standardize common procedures and oversee the graphics pipeline of a hardware accelerator.

9. What are Transformation Matrices in computer graphics?

A transformation matrix is a square matrix that represents a linear transformation in vector space. It maps coordinates from one system to another while preserving the linear structure of the space.

The matrix holds the coefficients of the transformation, which makes it easy to compute and change geometrical objects.

$$\mathrm{\begin{bmatrix}a & b \\c & d \end{bmatrix} \: \begin{bmatrix} x \\ y \end{bmatrix} \: = \: \begin{bmatrix} x' \\ y' \end{bmatrix}}$$

For example, in a 2D coordinate system with basis vectors i and j, a transformation matrix T transforms a vector v = (x, y) into a vector w = (x', y'). The columns of T are the images of i and j, so the matrix's coefficients determine the direction and magnitude of the transformation and are used to compute w.
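The matrix equation above expands to x' = ax + by and y' = cx + dy. A minimal sketch of that computation follows; the function name `transform` and the sample matrices are chosen for illustration.

```python
def transform(m, v):
    """Apply a 2x2 matrix ((a, b), (c, d)) to a vector (x, y)."""
    (a, b), (c, d) = m
    x, y = v
    return (a * x + b * y, c * x + d * y)


scale = ((2, 0), (0, 3))   # stretch x by 2 and y by 3
shear = ((1, 1), (0, 1))   # shear along x

print(transform(scale, (2, 3)))  # (4, 9)
print(transform(shear, (2, 3)))  # (5, 3)
print(transform(shear, (1, 0)))  # (1, 0) -> first column of the matrix
```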

10. How do Translation, Scaling, and Rotation work in 2D / 3D transformations?

Translation, scaling, and rotation are key techniques used in 2D and 3D transformations to alter an object's position, size, and orientation.

Translation moves an object: a translation matrix adds offsets along x, y, and z to every coordinate.

Scaling resizes an object using scaling factors along the X, Y, and Z directions; the scaling matrix holds one factor per axis.

Rotation uses the trigonometric functions sine and cosine. It changes an object's orientation around one or more axes, either about the origin or about an arbitrary line.
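The three operations can be combined into one matrix by multiplication. The sketch below uses 2D homogeneous 3x3 matrices and assumes column vectors, so the rightmost matrix is applied first; all function names are illustrative, and the 3D case adds one more row and column.

```python
import math


def mat_mul(a, b):
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply(m, point):
    """Apply a 3x3 homogeneous matrix to a 2D point."""
    v = (point[0], point[1], 1.0)
    out = [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]
    return (out[0], out[1])


def translation(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


def scaling(sx, sy):
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]


def rotation(theta):
    """Counter-clockwise rotation about the origin by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


# Scale by 2, then rotate 90 degrees, then translate by (3, 4).
m = mat_mul(translation(3, 4), mat_mul(rotation(math.pi / 2), scaling(2, 2)))
result = apply(m, (1, 0))
print(result)  # approximately (3.0, 6.0)
```

The order matters: translating first and rotating afterwards would generally move the point somewhere else.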

11. What is a View Matrix in graphics rendering?

The view matrix is used to convert vertices from world-space to view-space. It is typically concatenated with the object's world matrix and the projection matrix, which allows vertices to be transformed directly from object-space to clip-space in the vertex program.

If M represents the object's world matrix, V the view matrix, and P the projection matrix, the concatenated world, view, and projection transform is commonly called the MVP matrix.
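As a worked form of this concatenation, assuming vertices are written as column vectors (a common convention in OpenGL-style code), the world matrix is applied first and the projection last, so the product is written right to left:

$$\mathrm{v_{clip} \: = \: P \: V \: M \: v_{object}}$$

With a row-vector convention the same chain is written in the opposite order, as the vertex multiplied by M, then V, then P. The subscripts used here are illustrative labels for the two spaces.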

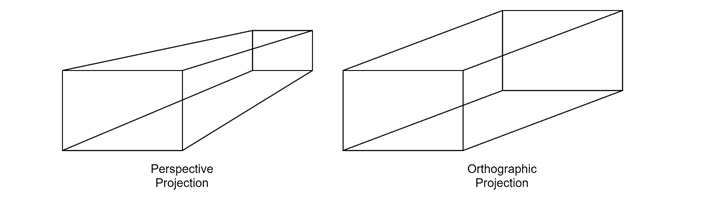

12. What is Perspective Projection? How is it different from Orthographic Projection?

Orthographic projections are parallel projections that can be represented by an affine transformation. Perspective projections are not parallel projections: the projection lines converge at a single point, so distant objects appear smaller.

As the following example shows, in a perspective projection the projection lines are assumed to meet at a point, whereas in an orthographic projection they are parallel and meet only at infinity.

Orthographic projections are commonly used in CAD drawings and technical documentation because dimensions are easy to measure, for example when verifying that parts fit in a floor plan. They can also be used to express a problem in a different basis where the coordinates are easier to work with.
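The difference can be seen numerically with a simplified setup. This sketch assumes a camera at the origin looking along the positive z axis with the image plane at distance d; the function names are illustrative, and real pipelines use 4x4 projection matrices instead.

```python
def orthographic(p):
    """Parallel projection onto the xy-plane: depth is simply dropped."""
    x, y, z = p
    return (x, y)


def perspective(p, d=1.0):
    """Project onto the plane z = d through the origin; requires z > 0."""
    x, y, z = p
    return (d * x / z, d * y / z)


near = (1.0, 1.0, 2.0)
far = (1.0, 1.0, 4.0)

print(orthographic(near), orthographic(far))  # (1.0, 1.0) (1.0, 1.0)
print(perspective(near), perspective(far))    # (0.5, 0.5) (0.25, 0.25)
```

Under the orthographic projection both points land in the same place regardless of depth, which is why measurements are preserved; under perspective the farther point moves toward the center of the image.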

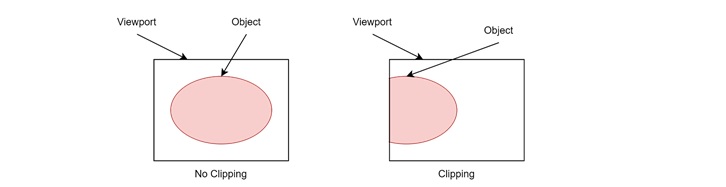

13. What is the concept of Clipping in graphics?

Displaying a large picture usually requires scaling and translation, as well as identifying which part is visible. This is challenging because some elements lie only partially inside the viewing area, and the parts of lines or elements that fall outside must be omitted.

Clipping is the process of determining the visible and invisible portions of each element; visible portions are kept and invisible ones are discarded. There are various types of clipping, including line and polygon clipping.
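For line clipping, a first step is often to classify each segment against the window. The sketch below shows the region-code test used by the Cohen-Sutherland approach; it only classifies segments and does not compute the clipped endpoints, and the names and window coordinates are assumptions for illustration.

```python
INSIDE, LEFT, RIGHT, BOTTOM, TOP = 0, 1, 2, 4, 8


def outcode(x, y, xmin, ymin, xmax, ymax):
    """Bit code describing where (x, y) lies relative to the clip window."""
    code = INSIDE
    if x < xmin:
        code |= LEFT
    elif x > xmax:
        code |= RIGHT
    if y < ymin:
        code |= BOTTOM
    elif y > ymax:
        code |= TOP
    return code


def classify_segment(p0, p1, window):
    """Return 'accept', 'reject', or 'needs clipping' for a line segment."""
    c0 = outcode(p0[0], p0[1], *window)
    c1 = outcode(p1[0], p1[1], *window)
    if c0 == INSIDE and c1 == INSIDE:
        return "accept"          # both endpoints visible
    if c0 & c1:
        return "reject"          # both endpoints outside on the same side
    return "needs clipping"      # may cross the window boundary


window = (0, 0, 10, 10)  # xmin, ymin, xmax, ymax
print(classify_segment((1, 1), (9, 9), window))     # accept
print(classify_segment((-5, 1), (-1, 9), window))   # reject
print(classify_segment((-5, 5), (15, 5), window))   # needs clipping
```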

14. What are vectors and how are they used in computer graphics?

Vector graphics are a versatile and scalable approach to computer graphics that uses mathematical equations and geometric shapes instead of pixels, as raster graphics do. They have no resolution limit and retain image quality at any size.

Graphic artists store their work as vector descriptions, which are based on analytic (coordinate) geometry. One of the most prevalent vector graphics formats is SVG.

Ivan Sutherland developed Sketchpad in 1963, a pioneering vector graphics editor that allowed users to create and edit vector graphics on a computer screen.

15. What is Ray Tracing, and how does it differ from Rasterization?

Ray tracing is a technique used to display three-dimensional objects on a two-dimensional screen. It follows the paths of light rays from the eye into the scene and computes how they interact with the objects they hit. Historically used mainly for offline rendering, it is now also used in real-time graphics.

Rasterization, on the other hand, is another technique for displaying three-dimensional objects on a two-dimensional screen. Objects are modeled as meshes of virtual triangles, whose vertices are shared with neighboring triangles of different sizes and shapes. Each vertex carries information such as position, color, texture, and a "normal" that describes the surface orientation; the triangles are then converted into pixels on the screen.
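The core operation in ray tracing is finding where a ray from the eye hits an object. As a sketch, the following tests a ray against a sphere by solving the resulting quadratic equation; the function name and the scene values are illustrative assumptions.

```python
import math


def ray_sphere(origin, direction, center, radius):
    """Return the smallest non-negative ray parameter t of a hit, or None on a miss."""
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t >= 0:
            return t
    return None  # the sphere is entirely behind the ray origin


# Eye at the origin, unit sphere centered 5 units down the z axis.
print(ray_sphere((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0))  # 4.0
print(ray_sphere((0, 0, 0), (0, 1, 0), (0, 0, 5), 1.0))  # None
```

A rasterizer approaches the problem from the other side: instead of asking what each ray hits, it asks which pixels each triangle covers.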

16. What is the role of normal vectors in lighting calculations?

Normal vectors are used in lighting calculations for 3D graphics. They indicate surface orientation at each point. This is needed for light reflection and shading. These vectors are essential for calculating diffuse and specular reflections, creating realistic highlights, and implementing techniques like bump mapping.

In shader programs, normal vectors are key inputs for various lighting models. They allow for efficient creation of complex lighting effects without requiring excessive geometric detail, making them necessary for real-time 3D rendering and smooth lighting across surfaces.
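A minimal example of a lighting model that takes the normal as input is Lambertian diffuse shading, where brightness is the cosine of the angle between the normal and the direction to the light. The sketch below assumes a single directional light and ignores color and attenuation; the function names are illustrative.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(normal, to_light):
    """Diffuse intensity in [0, 1]: the clamped dot product of unit vectors."""
    n = normalize(normal)
    l = normalize(to_light)
    return max(0.0, sum(a * b for a, b in zip(n, l)))


print(lambert((0, 0, 1), (0, 0, 1)))   # 1.0 -> light directly above the surface
print(lambert((0, 0, 1), (1, 0, 1)))   # about 0.707 -> light at 45 degrees
print(lambert((0, 0, 1), (0, 0, -1)))  # 0.0 -> light behind the surface
```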

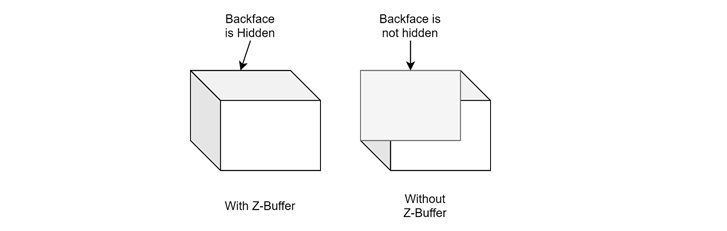

17. What is the significance of Depth Buffers in 3D rendering?

Depth buffers are crucial in 3D rendering for determining the visibility of objects and surfaces. Without them, rendering order can cause incorrect layering of faces.

Even for a simple cube, if the system does not know the proper ordering, it draws every face in arbitrary order, and a face that should be hidden may appear in front. Face culling alone does not resolve visibility in general; a depth buffer is needed to decide, pixel by pixel, which surface is closest.

18. What is Bresenham's line-drawing algorithm?

Bresenham's line algorithm is a raster drawing algorithm that selects the points of an n-dimensional raster that form a close approximation to a straight line between two points. It is commonly used for drawing line primitives in bitmap images, as it uses only inexpensive operations like integer addition, subtraction, and bit shifting.

It is an incremental error algorithm and one of the earliest algorithms developed in computer graphics. An extension of the algorithm, called the midpoint circle algorithm, can be used for drawing circles.
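A compact way to see the incremental error idea is the following sketch, which uses a widely known all-octant integer formulation of the algorithm in 2D. It returns the chosen pixels as a list rather than drawing them; the function name is illustrative.

```python
def bresenham(x0, y0, x1, y1):
    """Return the integer grid points approximating the line from (x0, y0) to (x1, y1)."""
    points = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy  # running error term, updated with integer additions only
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:   # step in x
            err += dy
            x0 += sx
        if e2 <= dx:   # step in y
            err += dx
            y0 += sy
    return points


print(bresenham(0, 0, 5, 2))
# [(0, 0), (1, 0), (2, 1), (3, 1), (4, 2), (5, 2)]
```

No floating-point arithmetic or division is needed, which is what made the method attractive on early hardware.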

19. What is Scanline Algorithm, and how is it used in raster graphics?

The scan-line algorithm is used in 3D graphics for hidden surface removal. It processes one scan line at a time, rather than one pixel at a time.

The algorithm examines the points where the scan line intersects polygon surfaces from left to right and performs depth calculations to identify hidden regions. It then updates the color-intensity values in the refresh buffer if the flag of the corresponding surface is on.

20. What is Midpoint Circle Algorithm?

The midpoint circle algorithm is a method used in computer graphics to draw circles efficiently. It is an extension of Bresenham's line drawing algorithm. It works by determining which pixels should be colored to form a circle on a digital screen.

Starting from one point, the algorithm moves along the circle's circumference, deciding whether to move straight or diagonally to the next pixel. It makes these decisions by calculating the midpoint between two possible pixels. This approach avoids using complex trigonometric calculations, making it faster and easier to implement.
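The stepping decision described above can be sketched as follows. This version computes one octant and mirrors each point into the other seven; it collects pixels in a set instead of drawing them, and the function name is illustrative.

```python
def midpoint_circle(cx, cy, r):
    """Return the set of integer pixels on a circle of radius r centered at (cx, cy)."""
    points = set()
    x, y = 0, r
    d = 1 - r  # decision value for the midpoint between the two candidate pixels
    while x <= y:
        # Mirror the computed octant point into all eight octants.
        for px, py in ((x, y), (y, x), (-x, y), (-y, x),
                       (x, -y), (y, -x), (-x, -y), (-y, -x)):
            points.add((cx + px, cy + py))
        if d < 0:
            d += 2 * x + 3            # midpoint inside: move straight
        else:
            d += 2 * (x - y) + 5      # midpoint outside: move diagonally
            y -= 1
        x += 1
    return points


circle = midpoint_circle(0, 0, 3)
print(len(circle))       # 16
print((3, 0) in circle)  # True
```

Only integer additions and comparisons are used inside the loop.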

21. How does the Flood-Fill Algorithm work?

The flood fill algorithm is a method for filling a region whose boundary may consist of several colors. It starts with a seed point inside the region and uses a four-connected or eight-connected approach to fill it with the desired color.

Flood fill is therefore well suited to regions bounded by multiple colors whose interior has a single color. The algorithm starts at a specified interior point (x, y) and reassigns every connected pixel that has the interior color to the desired color, stepping through pixel positions until all interior points are repainted.
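The seed-based procedure can be sketched with a queue instead of recursion, which avoids deep call stacks on large regions. This example assumes a four-connected fill on a small grid of integer color values; the function name and the sample image are illustrative.

```python
from collections import deque


def flood_fill(grid, x, y, new_color):
    """Four-connected flood fill starting at column x, row y; modifies grid in place."""
    old_color = grid[y][x]
    if old_color == new_color:
        return grid
    queue = deque([(x, y)])
    while queue:
        cx, cy = queue.popleft()
        if not (0 <= cy < len(grid) and 0 <= cx < len(grid[0])):
            continue  # outside the image
        if grid[cy][cx] != old_color:
            continue  # boundary pixel (any other color) or already filled
        grid[cy][cx] = new_color
        queue.extend(((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)))
    return grid


# Interior color 0, bounded by two different colors (1 and 2).
image = [[0, 0, 1],
         [0, 1, 1],
         [2, 0, 0]]
flood_fill(image, 0, 0, 7)
print(image)  # [[7, 7, 1], [7, 1, 1], [2, 0, 0]]
```

The zeros in the bottom row are left untouched because they are not four-connected to the seed; an eight-connected fill would add the diagonal neighbors to the queue.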

22. What is Texture Mapping?

A texture map is an image, either bitmap or procedural, applied to the surface of a shape or polygon. Texture maps can be stored in image file formats, referenced by 3D models, or assembled into resource bundles. They can have one to three dimensions, with two dimensions being most common for visible surfaces.

Modern hardware may store texture map data in swizzled or tiled orderings for improved cache coherency. Rendering APIs manage texture map resources as buffers or surfaces, allowing "render to texture" for additional effects.

23. What is the purpose of Z-buffer in 3D rendering?

A Z-buffer is a type of depth buffer; it stores the depth information of the objects in a 3D scene.

The Z-buffer determines which object is in front and which is behind. This is essential for representing 3D objects correctly. Otherwise, faces that should be hidden behind others may be drawn in front and produce invalid images.
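The depth test itself is a simple comparison per pixel. The sketch below assumes that smaller z means closer to the viewer and that surfaces have already been broken into fragments of the form (x, y, z, color); both the representation and the function name are illustrative.

```python
def rasterize(fragments, width, height, background="."):
    """Keep, for each pixel, the color of the nearest fragment seen so far."""
    depth = [[float("inf")] * width for _ in range(height)]
    color = [[background] * width for _ in range(height)]
    for x, y, z, c in fragments:
        if z < depth[y][x]:      # closer than what is stored: overwrite
            depth[y][x] = z
            color[y][x] = c
    return color


# Three surfaces overlap at pixel (0, 0); they arrive in arbitrary order.
fragments = [(0, 0, 5.0, "R"), (0, 0, 2.0, "G"), (0, 0, 9.0, "B"), (1, 0, 3.0, "B")]
print(rasterize(fragments, 2, 1))  # [['G', 'B']]
```

The result does not depend on the order in which the fragments are submitted, which is exactly the property that unordered drawing lacks.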

24. What is Gouraud Shading, and how does it differ from Phong Shading?

Gouraud shading calculates lighting at vertices and interpolates colors across polygons. This method is computationally efficient but can produce visible artifacts, especially with specular highlights, which may appear angular or jump between vertices as the view changes. The quality heavily depends on model complexity.

Phong shading, on the other hand, interpolates lighting parameters across polygons and calculates lighting per fragment. This approach produces smoother, more realistic results, particularly for specular highlights, which appear round and move fluidly across surfaces.

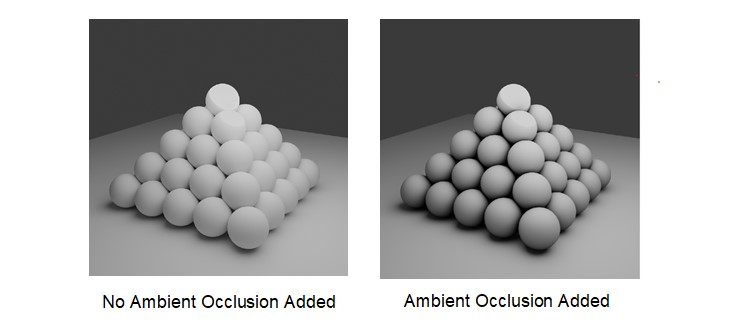

25. What is Ambient Occlusion, and how is it used in rendering?

Ambient occlusion is a type of lighting technique used in 3D graphics. This adds subtle shadows to make scenes look more realistic.

In the following figures, it will be clearer. It simulates how objects block light in the real world, darkening areas where objects are close together or in corners.

This technique calculates how much ambient light each surface point can "see," creating softer shadows in enclosed spaces. This significantly improves the overall visual quality and realism of 3D rendered scenes.

26. Differences between a Shader and a Texture?

Shaders and textures are two important components in 3D graphics, and they serve different purposes. Shaders are GPU-run programs that control the rendering of 3D objects, influencing lighting, color, and special effects. Shaders can create complex visual effects and are highly flexible, allowing dynamic changes based on inputs.

Textures, on the other hand, are images applied to 3D models to add detail and realism, providing surface information like color patterns, roughness, or bump effects.

27. What is interpolation in computer graphics?

Interpolation techniques are used in computer graphics to create animation. They produce smooth motion by taking two frames and generating the intermediate frames for the transition between them.

There are several types of interpolation, including linear and quadratic. They can be used to animate movement, rotation, and scaling, as well as for morphing and other techniques such as character animation (walking, running).

28. What is a Scene Graph?

In computer graphics, the scene graph is a data structure used in vector-based graphics editing applications and modern computer games to arrange a logical representation of a graphical scene. It consists of nodes in a tree structure, with the effect of a parent applied to all its child nodes.

In many programs, a geometrical transformation matrix is associated with each group node, so that related shapes and objects can be grouped into a compound object and manipulated efficiently as a single object.

29. What is Global Illumination in computer graphics?

Global illumination (GI) is a group of algorithms used in 3D computer graphics to make lighting in 3D scenes more realistic. These algorithms consider both direct light from sources and indirect light reflected by other surfaces. In theory, reflections, refractions, and shadows are all examples of global illumination, because when they are simulated one object affects the rendering of another.

In practice, however, usually only the simulation of diffuse inter-reflection or caustics is called global illumination.

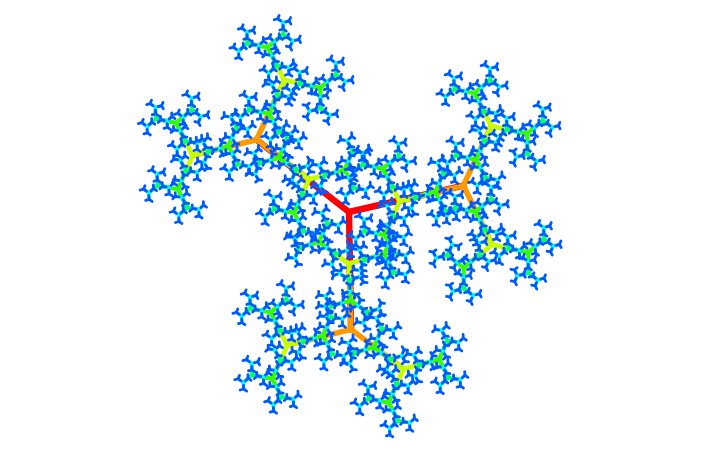

30. How do Fractals relate to computer graphics?

Fractals are complex images that a computer generates by repeatedly iterating a single formula or rule.

Geometric fractals have non-integer (fractional) dimensions and resemble shapes found in nature. They are created by starting with an initiator and repeatedly replacing parts of it with a generator, resulting in a deterministic, non-random, self-similar fractal.
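The initiator and generator idea can be illustrated with the Koch curve, chosen here as a well-known example and not taken from the text above. The initiator is a single line segment, and the generator replaces every segment with four shorter ones; the function name `koch_step` is illustrative.

```python
import math


def koch_step(points):
    """Replace every segment of a polyline with the four-segment Koch generator."""
    result = [points[0]]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = (x1 - x0) / 3.0, (y1 - y0) / 3.0
        a = (x0 + dx, y0 + dy)              # one third along the segment
        b = (x0 + 2 * dx, y0 + 2 * dy)      # two thirds along the segment
        # Peak: the middle third rotated by 60 degrees about point a.
        c60, s60 = 0.5, math.sqrt(3) / 2
        peak = (a[0] + dx * c60 - dy * s60, a[1] + dx * s60 + dy * c60)
        result.extend([a, peak, b, (x1, y1)])
    return result


curve = [(0.0, 0.0), (3.0, 0.0)]  # initiator: one straight segment
for _ in range(3):
    curve = koch_step(curve)
print(len(curve) - 1)  # 64 segments after three iterations (4 ** 3)
```

Each iteration multiplies the number of segments by four while shrinking them by a factor of three, which is the source of the curve's self-similarity.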

31. How do graphics techniques differ for real-time vs Offline Rendering?

Offline rendering is a traditional method of creating images or animations by computing every pixel in a scene before displaying the final result. It typically relies on powerful software such as Autodesk 3ds Max, V-Ray, or Blender Cycles.

Real-time rendering, on the other hand, is a fast-paced method used in video games and interactive applications, and is increasingly gaining popularity in architectural visualization.

32. What is a GPU, and how does it accelerate graphic rendering?

A GPU (graphics processing unit) is a specialized circuit that efficiently processes and manipulates computer graphics and image data. It offloads compute-intensive tasks from the CPU, enabling the CPU to handle more general computational workloads.

GPUs are used in gaming and 3D graphics rendering. They accelerate graphics processing by rendering complex environments and high-resolution textures in real time and by handling transformations, vertex processing, pixel shading, antialiasing, and similar tasks.

33. What is OpenGL, and why is it widely used in graphics development?

OpenGL is a graphics API used to communicate with graphics hardware (the GPU). Applications use it to draw their graphical output.

OpenGL allows for 2D and 3D vector graphics to be rendered through hardware acceleration, making it widely used in VR, CAD, and games. OpenGL is cross-platform and language-independent.

34. How does DirectX compare to OpenGL?

OpenGL and DirectX differ in scope and platform support. DirectX is a suite of APIs, including Direct3D and Direct2D, which are only compatible with Microsoft platforms like Windows and Xbox. OpenGL is cross-platform and compatible with Microsoft, Apple, and Linux systems.

Apple has deprecated OpenGL; however, it is still supported on macOS and iOS. In terms of gaming frame rate and system resource use, OpenGL and DirectX 11 are almost on par, with little noticeable difference in most cases.

35. What is the concept of LOD (Level of Detail) in 3D rendering?

Level of detail (LOD) in computer graphics refers to the complexity of a 3D model representation. It can be decreased as the model moves away from the viewer or based on metrics like object importance or viewpoint-relative speed.

LOD techniques increase rendering efficiency by reducing workload on graphics pipeline stages, usually vertex transformations. LOD is often applied to geometry detail but can be generalized to include shader management and mipmapping for higher rendering quality.
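A distance-based selection rule is one simple way to apply LOD. The sketch below assumes a list of increasing distance thresholds, with level 0 as the most detailed model; the threshold values and the function name are hypothetical.

```python
def select_lod(distance, thresholds):
    """Return the LOD index for a distance: 0 is most detailed, higher is coarser."""
    for level, limit in enumerate(thresholds):
        if distance < limit:
            return level
    return len(thresholds)  # beyond the last threshold: coarsest model


thresholds = (10.0, 30.0, 80.0)  # hypothetical switch distances in scene units
for d in (5.0, 10.0, 50.0, 200.0):
    print(d, "->", select_lod(d, thresholds))
# 5.0 -> 0, 10.0 -> 1, 50.0 -> 2, 200.0 -> 3
```

Engines often add hysteresis or blend between levels to hide the switch; that refinement is omitted here.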

36. What is a Game Engine, and how does it relate to computer graphics?

A game engine is software that helps create video games. It handles many tasks like rendering graphics, processing physics, managing audio, and handling user inputs.

In computer graphics, the game engine's graphics component is the important part. It renders 2D and 3D visuals, ensuring smooth and realistic images. This allows game developers to focus on designing the game rather than building graphics from scratch.

Some of the popular game engines include Unity, Unreal Engine, and Godot.

37. What are some common optimization techniques used in real-time graphics?

Optimization techniques are used to enhance the performance and make the system faster in different applications by removing unnecessary computations. There are many such optimization techniques, including the following −

- Instancing − Reuses the same object multiple times with different transformations, reducing the number of draw calls.

- Culling − Skips rendering objects not visible to the camera, saving processing power.

- Level-of-Detail (LOD) Management − Uses simpler models for distant objects to maintain performance without sacrificing visual quality.

38. How do graphics techniques for VR differ from traditional 3D graphics?

VR uses different technology. In VR, we use stereoscopic images: two slightly different images, one per eye, are combined by the viewer to give a realistic 3D view. Traditional 3D graphics, on the other hand, are rendered as a single 2D image, which does not give a true sense of depth.

VR graphics also differ in terms of immersion, performance, field of view, and interaction. VR aims for a fully immersive experience, whereas traditional 3D graphics focus on visual representation. VR requires high frame rates and low latency for smooth interaction, and a wider field of view to mimic human vision.